Table of Contents

- Phase 1 – Requirement Gathering

- Phase 2 – Design

- Phase 3 – Development

- Phase 4 – Testing (Validation / Verification)

- Phase 5 – Maintenance / Production Support

- Highlight: Testing Types & What They Uncover

- Final Thoughts

Quality isn’t an afterthought. It’s embedded in what we test, when we test, and how we test. Over my 10+ years as a Test Automation Architect and now through scaling QAble, I’ve seen projects fail because testing started too late or was too generic. This playbook maps the lifecycle phases of software (Requirements → Design → Development → Testing → Maintenance) to what kinds of testing you should apply—and when. It also offers a sharp reference of testing types and the issues each uncovers.

Use this as both a blog for the QAverse community and as an operational guide for your QA teams.

Phase 1 – Requirement Gathering

Objective

Ensure that we capture the right requirements: clear, testable, tied to business value and risk.

Key Test Activities

- Conduct requirements reviews, walkthroughs, or inspections (static testing).

- Define acceptance criteria up front—“Given/When/Then” style or equivalent.

- Build a traceability matrix: requirement → acceptance criteria/test objective.

- Perform risk-tagging: high/medium/low based on business impact and probability.
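The traceability matrix and risk tags can start life as plain data, well before a test-management tool is involved. The sketch below is one possible shape, not a prescribed format: the `Requirement` fields, the 1 to 3 scoring scale, and the thresholds inside `risk_tag` are all assumptions chosen for illustration.

```python
from dataclasses import dataclass, field


@dataclass
class Requirement:
    """One row of a hypothetical traceability matrix."""
    req_id: str
    description: str
    impact: int        # business impact, 1 (low) to 3 (high); assumed scale
    probability: int   # likelihood of failure, 1 (low) to 3 (high); assumed scale
    acceptance_criteria: list[str] = field(default_factory=list)


def risk_tag(req: Requirement) -> str:
    """Map impact x probability onto high/medium/low (thresholds are illustrative)."""
    score = req.impact * req.probability
    if score >= 6:
        return "high"
    if score >= 3:
        return "medium"
    return "low"


def untraced(requirements: list[Requirement]) -> list[str]:
    """Return IDs of requirements that have no acceptance criteria yet."""
    return [r.req_id for r in requirements if not r.acceptance_criteria]


requirements = [
    Requirement(
        "REQ-1",
        "Login prompts for second factor once MFA is enabled",
        impact=3,
        probability=2,
        acceptance_criteria=[
            "Given MFA is enabled, when the user logs in, "
            "then a second factor is requested"
        ],
    ),
    Requirement("REQ-2", "User can change display name", impact=1, probability=1),
]

# Highest-risk requirements first, so later phases can prioritise them.
by_risk = sorted(requirements, key=lambda r: r.impact * r.probability, reverse=True)
```

Calling `untraced(requirements)` during the review session gives a quick list of requirements that still lack a testable criterion.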

Implementation Tips

- Pull BA/PM, Dev Lead, QA Lead into one session early. QA drives the checklist: “Is this requirement unambiguous? Testable? Non-functional aspects included?”

- Use your test-management tool (Jira, TFS, etc.) to link requirements to test cases later.

- Maintain a risk log so that later phases can prioritize accordingly.

Example

Suppose you’re building a “User Onboarding with MFA” feature. During requirement gathering:

- You check: Does MFA specify methods (SMS, email, authenticator app)? What are the failure paths? What's the expected performance?

- Acceptance criteria defined: “When user enables MFA, subsequent login must prompt second factor.” “If second factor invalid, show error code XYZ.”

- Risk tag: High — because security and user experience are critical here.
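To show how the two acceptance criteria translate into executable checks, here is a minimal Given/When/Then sketch. `FakeAuthService`, its method names, the return strings, and the valid code `123456` are hypothetical stand-ins invented for this example; only the error code `XYZ` comes from the criterion itself.

```python
class FakeAuthService:
    """In-memory stand-in for the real system; exists only to make the criteria executable."""

    def __init__(self) -> None:
        self._mfa_enabled: set[str] = set()

    def enable_mfa(self, user: str) -> None:
        self._mfa_enabled.add(user)

    def login(self, user: str, password: str) -> str:
        # Password checking is out of scope for this sketch.
        return "SECOND_FACTOR_REQUIRED" if user in self._mfa_enabled else "LOGGED_IN"

    def verify_second_factor(self, user: str, code: str) -> str:
        # "XYZ" is the placeholder error code from the acceptance criterion.
        return "LOGGED_IN" if code == "123456" else "XYZ"


def test_login_prompts_second_factor_after_mfa_enabled() -> None:
    # Given a user who has enabled MFA
    auth = FakeAuthService()
    auth.enable_mfa("alice")
    # When the user logs in
    result = auth.login("alice", "correct-password")
    # Then the second factor is requested
    assert result == "SECOND_FACTOR_REQUIRED"


def test_invalid_second_factor_returns_error_code() -> None:
    # Given a user who has enabled MFA and passed the password step
    auth = FakeAuthService()
    auth.enable_mfa("alice")
    auth.login("alice", "correct-password")
    # When an invalid second factor is submitted
    result = auth.verify_second_factor("alice", "000000")
    # Then error code XYZ is returned
    assert result == "XYZ"
```

Writing the criteria this way at requirement time tends to expose gaps early, for example what should happen when the code is valid but expired.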

Phase 2 – Design

Objective

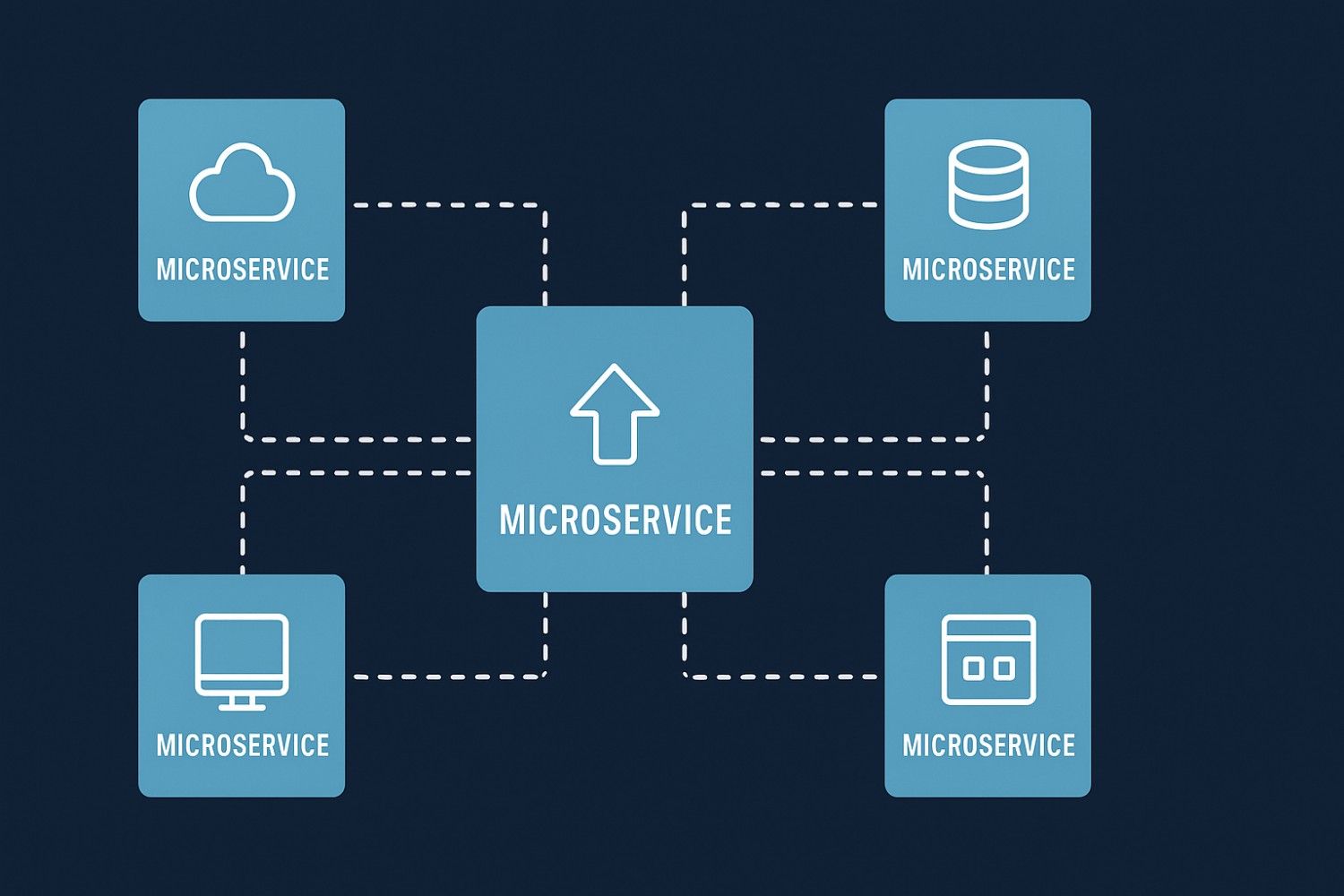

Validate that the architecture, interfaces, and modules are aligned with requirements, testable, and built for maintainability and quality.

Key Test Activities

- Design/architecture review sessions: QA + Architect + Devs.

- Testability review: Ask “How will we test this?” early.

- Define module-wise testing strategy: unit tests, integration tests, API tests, UI tests.

- Plan non-functional testing for modules (performance, security, usability) at design time.

Implementation Tips

- Have design artefacts (diagrams, interface contracts, data flows) ready for QA to review.

- Map modules to test strategy: e.g., “Token service → unit+API tests; UI onboarding → UI tests + usability review.”

- Identify automation approach early: which frameworks, test data strategy, environment needs.

Example

For our MFA module, the design includes four modules: Registration service, MFA service, Token generation, and SMS/email gateway. QA asks: Is the interface contract for Token generation defined? How will we stub the SMS gateway in the test environment? The testing strategy is defined accordingly.
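One way to answer the stubbing question at design time is to agree on a small interface contract and code against it. The following is a sketch under assumptions: `SmsGateway`, `StubSmsGateway`, `MfaService`, and the status strings are hypothetical names, not part of any real gateway SDK.

```python
from typing import Protocol


class SmsGateway(Protocol):
    """Hypothetical interface contract for the SMS/email gateway."""

    def send(self, recipient: str, message: str) -> bool: ...


class StubSmsGateway:
    """Test double: records messages instead of sending them."""

    def __init__(self, fail: bool = False) -> None:
        self.fail = fail
        self.sent: list[tuple[str, str]] = []

    def send(self, recipient: str, message: str) -> bool:
        if self.fail:
            return False  # simulate a gateway outage
        self.sent.append((recipient, message))
        return True


class MfaService:
    """Depends on the contract, not on a concrete gateway, so it can be tested in isolation."""

    def __init__(self, gateway: SmsGateway) -> None:
        self._gateway = gateway

    def send_challenge(self, phone: str, code: str) -> str:
        delivered = self._gateway.send(phone, f"Your verification code is {code}")
        return "CHALLENGE_SENT" if delivered else "DELIVERY_FAILED"
```

Because `MfaService` only sees the contract, the test environment can pass `StubSmsGateway(fail=True)` to exercise the outage path without touching a real provider.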

Phase 3 – Development

Objective

Deliver code that meets design and requirements, with built-in quality and minimal defects. Early testing (unit, integration) matters most here.

Key Test Activities

- Unit testing by developers (with coverage targets).

- Static code analysis / linting / code reviews embedded in CI.

- Early integration tests (modules interacting).

- Shift-left: QA participates by identifying integration touch-points and defining “smoke tests” early.

Implementation Tips

- Enforce coding standards and unit test coverage thresholds for critical modules.

- Configure CI pipelines: on each commit run unit + static analysis + selected integration smoke tests.

- QA ensures test hooks exist (APIs, test data, stubs) so automation is feasible.

Example

Token service code is committed. Developers write unit tests around edge cases (token expiry, replay attacks). Static analysis catches a hard-coded secret. QA defines a CI smoke test: user registration → token generated → MFA login, completing in under 2 s.
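The two edge cases named here, token expiry and replay, could be unit-tested roughly as follows. This is a minimal sketch, not the real token service: `TokenService`, the 30-second TTL, and the choice to pass `now` explicitly (so tests stay deterministic) are assumptions made for the example.

```python
import secrets


class TokenService:
    """Minimal one-time token service; an illustrative sketch only."""

    def __init__(self, ttl_seconds: float = 30.0) -> None:
        self._ttl = ttl_seconds
        self._issued: dict[str, float] = {}  # token -> expiry timestamp

    def issue(self, now: float) -> str:
        token = secrets.token_hex(16)
        self._issued[token] = now + self._ttl
        return token

    def verify(self, token: str, now: float) -> bool:
        # pop() makes every token single-use, which blocks replay.
        expires_at = self._issued.pop(token, None)
        return expires_at is not None and now <= expires_at


def test_token_is_valid_before_expiry() -> None:
    service = TokenService(ttl_seconds=30)
    token = service.issue(now=1000.0)
    assert service.verify(token, now=1029.0)


def test_token_is_rejected_after_expiry() -> None:
    service = TokenService(ttl_seconds=30)
    token = service.issue(now=1000.0)
    assert not service.verify(token, now=1031.0)


def test_token_cannot_be_replayed() -> None:
    service = TokenService(ttl_seconds=30)
    token = service.issue(now=1000.0)
    assert service.verify(token, now=1001.0)
    assert not service.verify(token, now=1002.0)  # second use is a replay
```

Injecting the clock value instead of calling the system time inside the service is what keeps these tests fast and free of sleeps.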

Phase 4 – Testing (Validation / Verification)

Objective

Test the system end-to-end: functional behaviour, regressions, non-functional qualities (performance, security, usability). Make sure it’s release-ready.

Key Test Activities

- Build test plan & strategy: link requirements → acceptance criteria → test cases.

- Focus on risk-based prioritisation: test high-risk features first.

- Execute functional tests (happy path, edge, negative flows).

- Execute non-functional tests: performance, security, usability, compatibility.

- Maintain and run regression suite as fixes roll in.

- Conduct UAT (user-acceptance) with stakeholders if applicable.

Implementation Tips

- Set up test environment mirroring production (config, data, integrations).

- Maintain automation suite for API, UI, regression. Use manual exploratory testing for unknown/complex areas.

- Track metrics: defect counts, test coverage, automation coverage, test execution time, mean time to fix.
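Two of these metrics, automation coverage and mean time to fix, are simple enough to compute from exported data. The sketch below assumes a hypothetical `Defect` record with `opened` and `fixed` timestamps; real trackers expose these fields under different names.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta
from typing import Optional


@dataclass
class Defect:
    """Hypothetical defect record; field names are assumptions."""
    opened: datetime
    fixed: Optional[datetime] = None  # None while the defect is still open


def automation_coverage(automated: int, total: int) -> float:
    """Share of test cases that are automated, as a percentage."""
    return 100.0 * automated / total if total else 0.0


def mean_time_to_fix(defects: list[Defect]) -> Optional[timedelta]:
    """Average open-to-fix duration over fixed defects; None if nothing is fixed yet."""
    durations = [d.fixed - d.opened for d in defects if d.fixed is not None]
    if not durations:
        return None
    return sum(durations, timedelta()) / len(durations)
```

Open defects are excluded from the mean on purpose; whether to count them with a provisional end date is a reporting decision each team needs to make explicitly.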

Example

On the MFA module, the test plan covers registration, MFA challenge/response, failure paths, recovery flows, SMS/email gateway integration, and multi-device support. Non-functional tests: the MFA system must handle 5,000 concurrent users, and the token service must pass a security scan. Defects such as “on email gateway failure onboarding blocks without error” are logged and fixed. The regression suite is updated. After all high/critical defects are fixed, UAT is signed off by stakeholders.
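The shape of a concurrency check for the 5,000-user target can be sketched in-process before a dedicated load tool is wired in. Everything here is an assumption for illustration: `fake_mfa_challenge` simulates the service with a short sleep, and a real run would replace it with calls against a test environment.

```python
import asyncio
import time


async def fake_mfa_challenge(user_id: int) -> bool:
    """Stand-in for a real MFA request; replace with a network call in practice."""
    await asyncio.sleep(0.001)  # simulated service latency
    return True


async def timed_call(user_id: int) -> tuple[bool, float]:
    """Run one simulated request and measure how long it took."""
    start = time.perf_counter()
    ok = await fake_mfa_challenge(user_id)
    return ok, time.perf_counter() - start


async def run_load(concurrent_users: int) -> dict[str, float]:
    """Fire all requests concurrently and summarise failures and p95 latency."""
    if concurrent_users < 1:
        raise ValueError("concurrent_users must be at least 1")
    results = await asyncio.gather(*(timed_call(i) for i in range(concurrent_users)))
    latencies = sorted(elapsed for _, elapsed in results)
    failures = sum(1 for ok, _ in results if not ok)
    p95 = latencies[int(0.95 * (len(latencies) - 1))]
    return {
        "requests": float(len(results)),
        "failures": float(failures),
        "p95_seconds": p95,
    }


def load_summary(concurrent_users: int = 5000) -> dict[str, float]:
    """Synchronous entry point, convenient for calling from a test runner."""
    return asyncio.run(run_load(concurrent_users))
```

The numbers from a simulated handler say nothing about the real system; the value of the sketch is in fixing the pass criteria (zero failures, a p95 budget) before the real endpoint is plugged in.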

Phase 5 – Maintenance / Production Support

Objective

Ensure the live system remains stable, responsive to change, and quality stays high as you evolve and patch.

Key Test Activities

- For every change/patch/hot-fix: update regression suite + run key automated tests before deployment.

- Post-deployment smoke/sanity tests and monitoring of production logs/metrics (error-rates, performance, security alerts).

- Regular regression cycle (daily/weekly) for major systems; periodic non-functional testing if usage or environment changes.

- Defect triage: root-cause analysis for production bugs → update test suites accordingly.
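The post-deployment activities above can be combined into a simple go/no-go gate. This is a rough sketch: the function names, the check names, and the 1% error-rate threshold are assumptions, and the error and request counts would come from whatever monitoring tool is in place.

```python
from typing import Callable


def run_smoke_checks(checks: dict[str, Callable[[], bool]]) -> list[str]:
    """Run each named check; return the names that failed or raised."""
    failed = []
    for name, check in checks.items():
        try:
            if not check():
                failed.append(name)
        except Exception:
            # A crashing check counts as a failure rather than stopping the run.
            failed.append(name)
    return failed


def error_rate_exceeded(errors: int, requests: int, threshold: float = 0.01) -> bool:
    """True when the observed error rate is above the threshold (1% is an assumed default)."""
    if requests == 0:
        return False
    return errors / requests > threshold


def should_roll_back(failed_checks: list[str], errors: int, requests: int) -> bool:
    """Roll back if any smoke check failed or production errors are too frequent."""
    return bool(failed_checks) or error_rate_exceeded(errors, requests)
```

A usage example: `should_roll_back(run_smoke_checks(checks), errors, requests)` can run as the last pipeline step after a patch release, with `checks` covering the registration and MFA login flows.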

Implementation Tips

- Maintain a clear process for patch releases: staging environment, smoke tests, pre-go-live checks.

- Use production monitoring tools (APM, log monitoring) to detect issues early.

- Feed back production defects into test-suite improvements and process refinements (continuous improvement).

Example

A patch adds “Authenticator App” to MFA. Regression suite is updated. Automated UI+API tests run pre-deployment. Post-deployment smoke test of registration + MFA flows passes. Monitoring shows no critical alerts for 48 hours. Test suite now includes new flow to guard against future regression.

Highlight: Testing Types & What They Uncover

Here’s a separate reference you can embed in your blog or share with your QA teams

Final Thoughts

In an era where software is delivered faster and user expectations are higher, quality is no longer optional—it’s a strategic differentiator. Internal teams that adopt a structured, phase-aligned testing playbook gain clarity, reduce risk, and deliver consistently. This blog isn’t just a checklist; it’s a blueprint for building that rhythm of quality. Use it to sharpen your process, empower your team, and establish credibility in your space. When you bring rigor, traceability, automation and risk-based focus into your testing lifecycle, you don’t just catch bugs—you build trust.

If you’re seeking to lift your QA game—whether you’re a startup scaling fast or an enterprise navigating complex releases—QAble brings hands-on expertise and tailored testing services that complement this playbook. We help you:

- Define and embed a robust testing strategy that aligns with your lifecycle.

- Build automation frameworks and continuous-testing pipelines that reduce manual load and time-to-feedback.

- Focus on high-risk areas and deliver measurable QA metrics that tie to business outcomes.

- Scale QA operations flexibly (without ballooning headcount) and integrate with your Dev/Ops practices.

You’re welcome to use the playbook above as your reference. And if you need a partner to operationalise it, QAble is ready to step in and accelerate your quality-journey.

Viral Patel is the Co-founder of QAble, delivering advanced test automation solutions with a focus on quality and speed. He specializes in modern frameworks like Playwright, Selenium, and Appium, helping teams accelerate testing and ensure flawless application performance.
